In today’s competitive landscape, data is not just a resource; it is the engine of strategic growth. To thrive, businesses must transform raw, disparate data into a single, reliable source of truth. This is the core mission of data engineering, and dbt (data build tool) sits at the heart of that transformation. dbt lets teams build robust data pipelines and models with SQL, but the true value lies not only in the transformation itself. It lies in the assurance that the transformed data is accurate and trustworthy.

This is where the disciplined practice of unit testing in dbt becomes a critical differentiator. Testing is an essential part of building the modern data stack, ensuring that your data products are not only functional but also consistently high-quality.

Here’s how unit testing in dbt addresses three common business challenges: costly data incidents, slow development cycles, and eroding trust in data.

Mitigating Risk and Preventing Costly Data Incidents

Data pipelines are susceptible to logic errors. A seemingly minor miscalculation in a CASE WHEN statement or a faulty join condition can make a data model produce incorrect key performance indicators (KPIs). Without proper validation, these errors can go unnoticed until they surface in a report or a business decision, forcing a costly and time-consuming investigation.

By running unit tests as part of a continuous integration/continuous delivery (CI/CD) pipeline, teams can catch logic errors during the development phase. This keeps faulty transformation logic from reaching production and protects downstream applications and business decisions from being compromised.

Accelerating Development and Optimizing Engineering Velocity

The traditional approach of running full data transformations on large datasets to test a small change is slow and resource-intensive. This creates a bottleneck that stifles innovation and delays the delivery of new data products.

Unit tests allow engineers to test a specific model’s logic in isolation, using a small, controlled set of mock data. This means a developer can validate a complex calculation in seconds rather than waiting for a full pipeline run that might take hours. This acceleration of the development lifecycle translates directly into a higher engineering velocity and a faster time-to-market for data-driven insights.

Cultivating Data Governance and Stakeholder Confidence

Data governance is the framework that ensures data is managed effectively throughout its lifecycle. A key pillar of this is data quality. The absence of rigorous data testing can cultivate distrust, compelling departments to establish their own isolated and unregulated data solutions.

Unit testing is a powerful tool for enforcing data governance standards. By explicitly defining and testing the expected behavior of a model, teams create a transparent and documented contract for that data product. This builds confidence across the organization, ensuring that the data in your BI dashboards and machine learning models is not only accurate but also consistent and auditable.

A Deeper Dive: How Unit Testing Works in dbt

Unit testing in dbt is a core component of a modern data quality framework. It’s about more than just checking for null values; it’s about validating the business logic embedded within your SQL transformations.

1. Unit Tests (The Logic Check): These tests verify that your SQL logic works correctly by running it against “mock” (fake) data you define, so the logic is checked before it ever runs on production data. Native unit tests are available in dbt Core 1.8 and later.

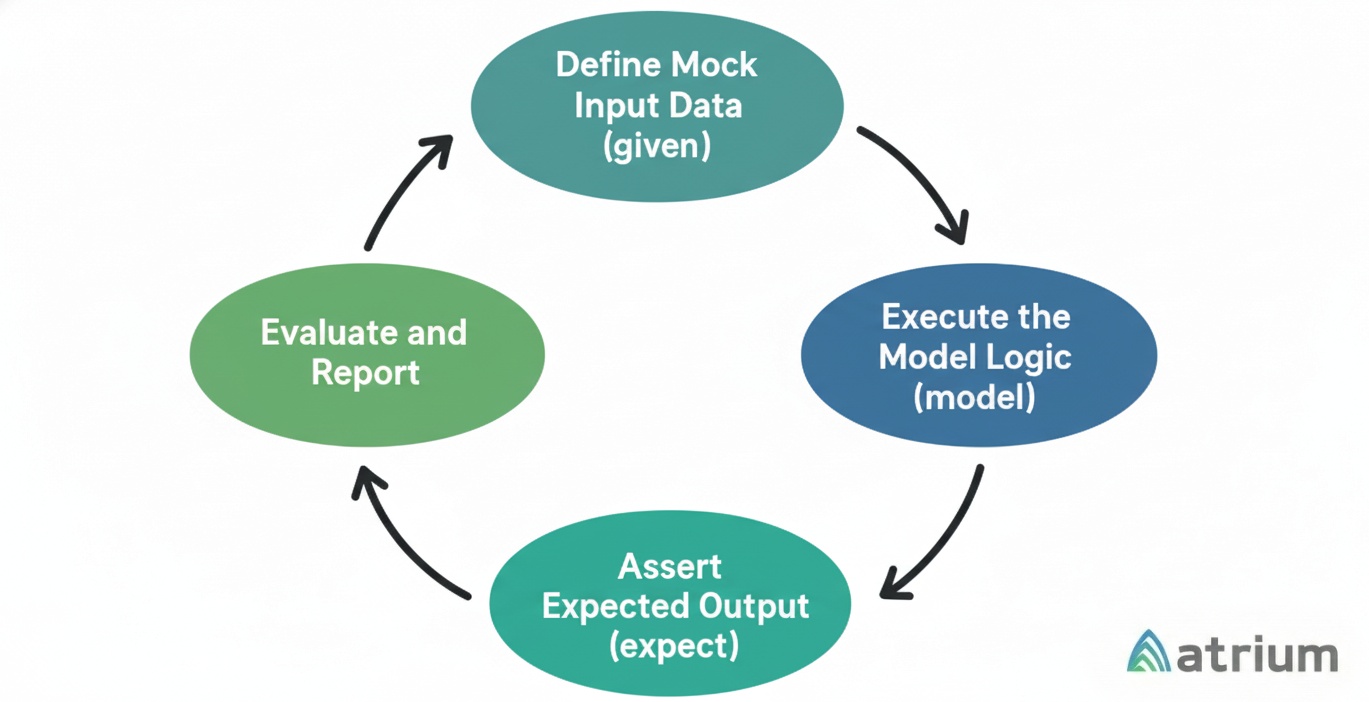

How Does It Work?

- Mock Input: You define the input data for one or more dependencies.

- Run the Model: dbt compiles and runs your model with the mocked input.

- Assert Output: You define what the correct output should look like.

- Test Passes/Fails: dbt compares the actual output to your expected output.
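
The four steps above can be sketched as a unit test definition in a model's YAML file. This is a minimal illustration, not a definitive implementation: the model `fct_orders`, its upstream `stg_orders`, and the columns `order_id`, `amount`, and `order_size` are hypothetical names, and the sketch assumes `fct_orders` contains a CASE WHEN expression that labels zero-amount orders as "empty" and all others as "standard".

```yaml
# Hypothetical example: all model and column names are assumptions.
unit_tests:
  - name: test_order_size_label
    description: "Zero-amount orders are labelled 'empty', all others 'standard'."
    model: fct_orders              # the model whose logic is under test
    given:
      # Mock input: replaces the real contents of the upstream model
      - input: ref('stg_orders')
        rows:
          - {order_id: 1, amount: 0}
          - {order_id: 2, amount: 50}
    expect:
      # Assert output: only the columns relevant to the test need to be listed
      rows:
        - {order_id: 1, order_size: "empty"}
        - {order_id: 2, order_size: "standard"}
```

A test like this can typically be run on its own with `dbt test --select "test_type:unit"`. Note that dbt still compiles and executes the SQL in your warehouse, and the direct parents of the tested model need to exist there, but only the mocked rows are read.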

2. Data Tests (The Integrity Check): These run against the actual data in your warehouse after the model is built, to ensure the final dataset is healthy.

- Pipeline Integrity: Verifies that the data has arrived and is structurally sound after the run.

- Business Rules: Validates that the data values respect real-world constraints (e.g., no negative prices).

3. Native & Singular Tests: Data tests come in two forms. Native tests (called generic tests in dbt) are reusable, built-in checks for common errors, while singular tests are custom SQL queries where any returned row indicates a failure.

- Schema Tests (Native): Pre-configured checks in YAML for standard issues like nulls (not_null) or duplicates (unique).

- Custom SQL Tests (Singular): Bespoke queries written to identify specific “bad data” rows defined by your team.
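
As a rough sketch of the native checks, the built-in `not_null` and `unique` tests can be attached to a column in a model's YAML file. The model and column names here (`fct_orders`, `order_id`) are hypothetical, and the `data_tests:` key assumes dbt 1.8 or later; earlier versions use `tests:` in the same position.

```yaml
# Hypothetical example: model and column names are assumptions.
models:
  - name: fct_orders
    columns:
      - name: order_id
        data_tests:        # named `tests:` before dbt 1.8
          - not_null       # fails if any order_id is NULL
          - unique         # fails if any order_id appears more than once
```

Singular tests follow a different convention: each one is a standalone SQL file in the project's tests directory, and the test fails if the query returns any rows.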

4. dbt-utils (The Community Toolkit): This is a package of pre-built tests for more complex checks, so you don’t have to write difficult SQL from scratch.

- Table Comparisons: Checks entire tables against each other, such as comparing dev vs. prod environments (equality).

- Advanced Patterns: Validates complex logic like non-overlapping dates (mutually_exclusive_ranges) or row-level logical expressions (expression_is_true).
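
The dbt-utils tests mentioned above might be configured as follows. This is a sketch under stated assumptions: the models `fct_orders`, `fct_orders_expected`, and `dim_price_periods` and their columns are hypothetical, the package is assumed to be installed through `packages.yml` and `dbt deps`, and the exact argument layout can vary between dbt and dbt-utils versions.

```yaml
# Hypothetical example: model and column names are assumptions.
models:
  - name: fct_orders
    data_tests:
      # Table comparison: fails if the two relations differ
      - dbt_utils.equality:
          compare_model: ref('fct_orders_expected')
      # Row-level business rule: no negative prices
      - dbt_utils.expression_is_true:
          expression: "price >= 0"

  - name: dim_price_periods
    data_tests:
      # Validity periods for the same product must not overlap
      - dbt_utils.mutually_exclusive_ranges:
          lower_bound_column: valid_from
          upper_bound_column: valid_to
          partition_by: product_id
          gaps: allowed
```

Unlike unit tests, these checks run against data that has already been built, so they complement the logic checks rather than replace them.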

Unlocking Your Data’s Full Potential: How Atrium Helps

dbt unit testing is powerful, but it works best on a solid foundation. That is where Atrium comes in. We help you build modern data platforms using the Data Vault method, which creates a flexible and scalable system that works well with dbt, so your data is not just tested but built to last.

Our experts bring together the best of data strategy, engineering, and business acumen to ensure your data transformation is a success. Our services include:

- Strategic Data Vault Design: We help you architect a robust data model that not only meets your current needs but is also flexible enough to adapt to future business changes.

- Best-in-Class dbt Implementation: We will work with your team to build and optimize your data pipelines, embedding best practices like dbt unit testing from day one.

- Empowerment and Training: We aim to empower your team with the skills and knowledge to own and evolve your data platform for long-term success.

Good Business Decisions Rely on Good Data

If you are tired of fighting with errors, slow reports, and the risk of making the wrong choice, you need a better plan. At Atrium, we combine a Data Vault structure with dbt testing to keep your data accurate and reliable. Reach out and let’s build a system you can actually trust.