Authors: Paul Harmon and Josh Fleischer

Interested in getting the most out of generative AI embedded in CRM? Many companies are. A recent study by McKinsey indicated that commercial leaders think organizations should be using machine learning and generative AI “often” or “almost always.” Salesforce recently announced a partnership with generative AI giant OpenAI and rolled out a trio of solutions that infuse Salesforce, Tableau, and Slack with generative AI:

- Einstein GPT for sales and service

- Tableau GPT and Tableau Pulse

- Slack GPT

With all the interest surrounding generative AI, particularly within CRM, it’s important to understand some key considerations. Here is a deep dive into what we know about Einstein GPT, Tableau GPT and Pulse, and Slack GPT, as well as next steps for your business.

Prompt engineering: What are you asking?

Before we get into the technology, let’s focus on a couple of key concepts that make large language models (LLMs) easier to use. What have been the biggest challenges users have had when working with generative AI? Often, it comes down to a simple problem: not asking the right questions. Prompt engineering, a burgeoning sub-field of AI, centers on how best to interact with generative AI tools to produce optimal outcomes.

Language often boils down to two things:

- What you are saying

- How you are saying it

LLMs often excel at the how part. They can produce output of varying lengths, styles, or vernaculars. Heck, if you want a Shakespearean sonnet about this quarter’s KPIs, written in iambic pentameter, LLMs can do it. However, the what component is the more challenging part of the equation. This part, driven by the prompts, is where LLMs can run into trouble with problematic behaviors like hallucination (i.e., confidently producing false output) or irrelevant responses. The what component is typically best addressed with prompt engineering.

To frame the core problem solved by prompt engineering, think about classic stories of people who find a genie in a magic lamp. They rub the lamp, and a genie comes out, promising to grant them wishes. Of course, there’s usually a catch: the person ends up with an unexpected outcome they hadn’t considered, because they weren’t careful with their language when they made the wish.

Interactions with generative AI tools can be thought of in a very similar manner. Knowing how to ask the right questions of the tool leads to better responses and can help mitigate some of the biggest downsides of these tools, such as hallucination. In many cases, inserting specific data into a prompt can lead to a much more personalized, valuable response.
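To make that concrete, here is a minimal Python sketch of filling a fixed prompt template with record data rather than asking a user to type a free-form prompt. The template wording and the field names (`contact_name`, `opportunity_name`, and so on) are hypothetical. They are not Salesforce fields or an Einstein GPT API. The idea is that the data supplies the *what* while the wording stays under your control.

```python
from string import Template

# Hypothetical prompt template; placeholders stand in for CRM record fields.
FOLLOW_UP_TEMPLATE = Template(
    "Write a short follow-up email to $contact_name at $account_name.\n"
    "Reference their open opportunity \"$opportunity_name\" "
    "(stage: $stage, amount: $amount).\n"
    "Use only the facts above; do not invent dates, prices, or commitments."
)

def build_prompt(record: dict) -> str:
    """Fill the template with record data; raises KeyError if a field is missing."""
    return FOLLOW_UP_TEMPLATE.substitute(record)

# Illustrative record values, not real data.
record = {
    "contact_name": "Dana Lee",
    "account_name": "Acme Corp",
    "opportunity_name": "Q3 Renewal",
    "stage": "Negotiation",
    "amount": "$48,000",
}
print(build_prompt(record))
```

Using `substitute` rather than `safe_substitute` is deliberate. A missing field fails loudly instead of sending the model a prompt with a gap it might fill in by guessing.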

Fine-tuning generative AI models

A critical difference between generative AI tools and many other AI models is that generative models are typically pre-trained on a massive corpus of data and are built to be deployed “out of the box” rather than trained from scratch. However, when highly personalized outputs are needed and prompt engineering alone can’t deliver them, a process called “fine-tuning” can be used to augment the pre-trained models with existing organizational data or with prompts paired with expected responses.

When fine-tuning generative AI models, you typically need to prepare a dataset of potential prompts, each paired with the response you would expect the LLM to output. Fine-tuning, in a sense, is like “training” the generative AI on specific data. It gives the model the additional ability to produce outputs in a much more specific style.
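As a rough illustration of what that dataset can look like, the Python sketch below serializes prompt/response pairs as JSON Lines (one JSON object per line). The layout is modeled on the chat-style `messages` format that some providers, including OpenAI, accept for fine-tuning. Treat the exact schema as an assumption and check your provider's documentation. The example pairs and system prompt are invented for illustration.

```python
import json

# Hypothetical prompt/expected-response pairs an organization might curate.
examples = [
    ("Summarize case 00123 for a customer update.",
     "Hi Sam, we've identified the billing issue and a corrected invoice is on its way."),
    ("Draft a renewal reminder for Acme Corp.",
     "Hi Dana, your Acme Corp subscription renews next month. Let us know if anything has changed."),
]

SYSTEM_PROMPT = "You write concise, friendly customer messages in our brand voice."

def to_jsonl(pairs, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Serialize (prompt, expected_response) pairs as one JSON object per line."""
    lines = []
    for prompt, response in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": response},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"

data = to_jsonl(examples)
print(data)
```

In practice, you would write `data` to a `.jsonl` file and upload it through whatever fine-tuning workflow your chosen model supports. Most real datasets need far more than two examples.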

Not all generative AI tools can be fine-tuned, which makes this an important consideration when choosing which generative AI to use. But when necessary, fine-tuning can help generative AI produce much more specific, purpose-built content tied to your data.

Interfaces that bring it all together

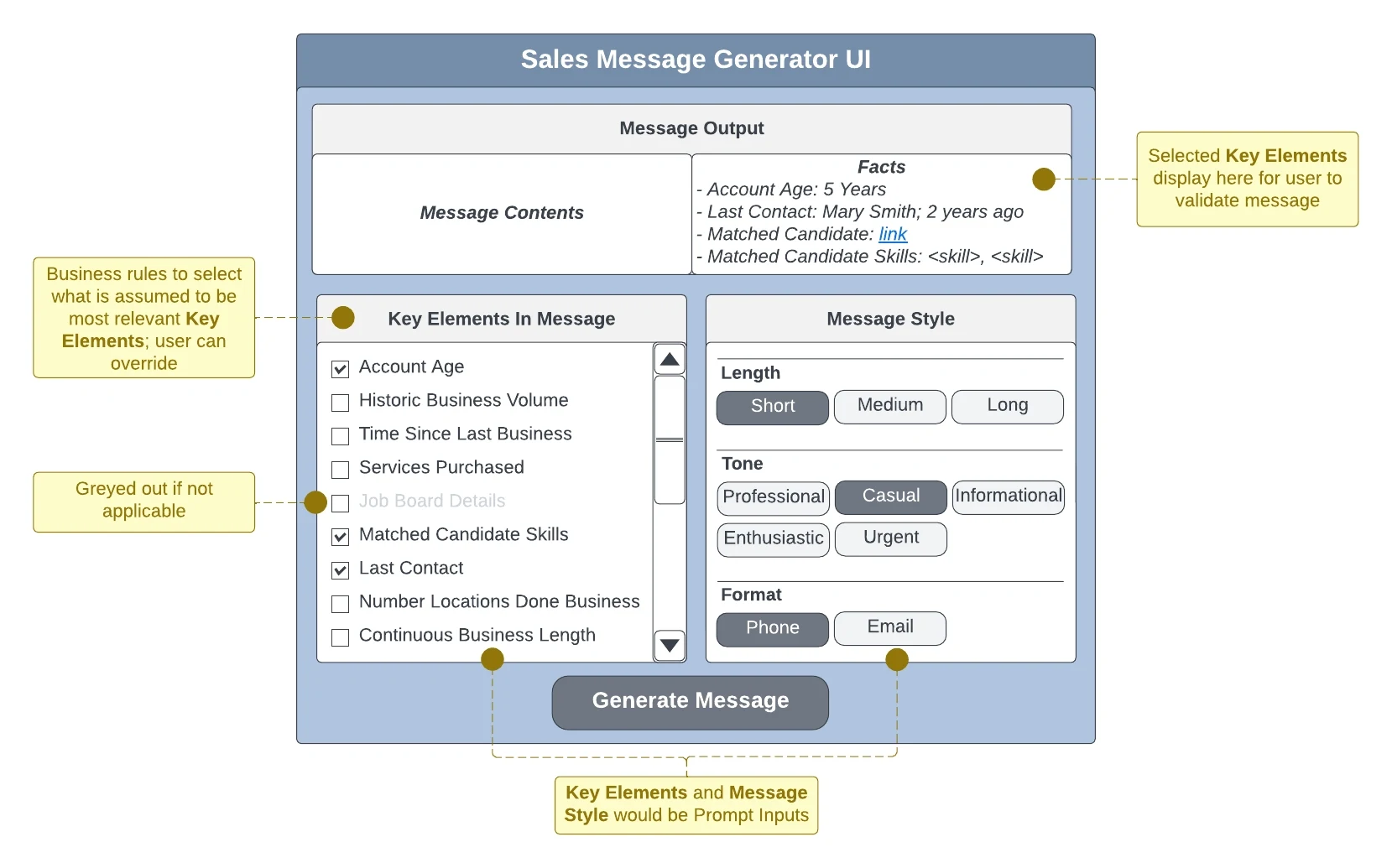

How might someone use generative AI in Salesforce? Building seamless UIs that allow users to take advantage of LLM functionality without directly entering prompts may be the best way to go.

The image above shows an example of this type of interface, which could be built directly into a Lightning Web Component or other functionality in Salesforce. On the left, a user can manage the information that is fed into a prompt (much of this information, or more, could be drawn from Data Cloud or core Salesforce data, if necessary) in order to control the content of a message. On the right, buttons control the style of the generated message. When using generative AI, it’s always wise to check that results match known data; surfacing key facts about a record helps validate the components of a message.
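One simple way to act on that advice is to compare a generated draft against facts you already hold. The Python sketch below is an assumption about how such a check might work, not a feature of Einstein GPT. It reports which known facts appear in the draft and flags dollar amounts the record doesn't contain, a common form of hallucination in sales messages. The fact names and values are hypothetical.

```python
import re

def check_facts(message: str, facts: dict) -> dict:
    """For each known fact, report whether its value appears in the message (case-insensitive)."""
    lowered = message.lower()
    return {name: str(value).lower() in lowered for name, value in facts.items()}

def unknown_amounts(message: str, known_amounts: set) -> list:
    """Return dollar amounts in the message that don't match any known value."""
    found = re.findall(r"\$\d+(?:,\d{3})*(?:\.\d{2})?", message)
    return [amount for amount in found if amount not in known_amounts]

# Hypothetical record facts and a model-generated draft.
facts = {"contact": "Dana", "opportunity": "Q3 Renewal", "amount": "$48,000"}
draft = "Hi Dana, following up on the Q3 Renewal. Your quote of $52,000 is ready."
print(check_facts(draft, facts))
# {'contact': True, 'opportunity': True, 'amount': False}
print(unknown_amounts(draft, {"$48,000"}))
# ['$52,000']
```

Substring matching like this is crude. It won't catch paraphrased errors, but it is cheap enough to run on every draft before a user hits send.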

Interfaces like this can be custom built, but many of the same concepts will underlie the functionality available in Einstein GPT, discussed in the next section.

Einstein GPT for sales and service

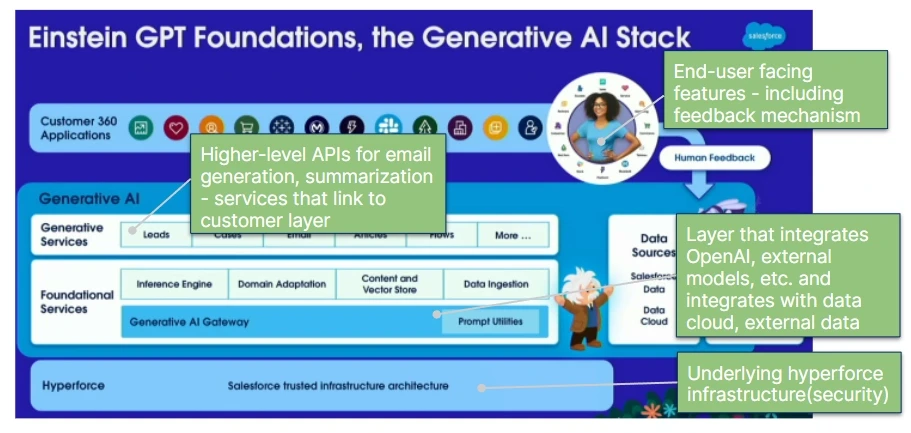

Einstein GPT in Salesforce will take advantage of multiple layers that allow for a customized experience and functionality. The “foundational layer” that underlies Einstein GPT will provide integration with OpenAI LLMs such as GPT-4 and ChatGPT, while also allowing custom integrations with other generative AI models. This lets an organization using Salesforce choose among various generative AI tools to power the generative features embedded in the platform.
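The general pattern behind a layer like this is to write application code against one interface and plug different models in behind it. The Python sketch below illustrates that pattern under stated assumptions. `TextGenerator`, `ModelRegistry`, and `EchoModel` are hypothetical names invented for this example, not Salesforce APIs. `EchoModel` is a stand-in so the sketch runs without calling a real LLM.

```python
from typing import Dict, Protocol

class TextGenerator(Protocol):
    """Anything that turns a prompt into text can plug into the registry."""
    def generate(self, prompt: str) -> str: ...

class EchoModel:
    """Stand-in for a real LLM client, used only so this sketch runs offline."""
    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"

class ModelRegistry:
    """Route requests to whichever model is registered under a given key."""
    def __init__(self) -> None:
        self._models: Dict[str, TextGenerator] = {}

    def register(self, key: str, model: TextGenerator) -> None:
        self._models[key] = model

    def generate(self, key: str, prompt: str) -> str:
        if key not in self._models:
            raise KeyError(f"No model registered under {key!r}")
        return self._models[key].generate(prompt)

registry = ModelRegistry()
registry.register("default", EchoModel("vendor-a"))
registry.register("in-house", EchoModel("custom-model"))
print(registry.generate("in-house", "Summarize this account."))
# [custom-model] Summarize this account.
```

Because front-end features only call `registry.generate`, swapping the model behind a key doesn't require touching those features.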

For the end user, applications using generative AI can reference the tools directly, or can be designed so that end users have a seamless experience, all moderated by the Generative Services layer that marries the technical back end with the front-end applications. Our recommendation is that front-end applications be designed so that end users do not have to enter prompts directly into an LLM like ChatGPT. Rather, they should be able to click buttons that generate reproducible prompts and controllable, consistent output. Taking the prompt engineering challenges out of the hands of end users can greatly reduce the variability of the output and simplify the process of producing it.
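Here is a minimal sketch of the button-driven approach in Python. Each button maps to a fixed instruction, so the same click always yields the same prompt and settings. The style names and instructions are hypothetical. The `temperature` field reflects a setting that many LLM APIs expose. A low value reduces randomness, but it doesn't guarantee identical output from every model.

```python
from dataclasses import dataclass

# Hypothetical style buttons and the fixed instruction each one maps to.
STYLE_INSTRUCTIONS = {
    "formal": "Use a formal, professional tone.",
    "friendly": "Use a warm, conversational tone.",
    "brief": "Keep the message under 80 words.",
}

@dataclass(frozen=True)
class GenerationRequest:
    prompt: str
    temperature: float  # lower values reduce randomness in many LLM APIs

def on_button_click(style: str, base_prompt: str) -> GenerationRequest:
    """Turn a button click into the same prompt and settings every time."""
    if style not in STYLE_INSTRUCTIONS:
        raise ValueError(f"Unknown style: {style}")
    prompt = f"{base_prompt}\n\n{STYLE_INSTRUCTIONS[style]}"
    return GenerationRequest(prompt=prompt, temperature=0.0)

req1 = on_button_click("formal", "Write a renewal reminder for Acme Corp.")
req2 = on_button_click("formal", "Write a renewal reminder for Acme Corp.")
print(req1 == req2)  # True: identical clicks produce identical requests
```

The user never sees or edits the prompt text. Changing the tone of every "formal" message becomes a one-line change in `STYLE_INSTRUCTIONS`.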

We anticipate that much of the early functionality in both Sales and Service GPT will be out of the box. However, it’s likely that these tools will become more flexible and customizable over time. Custom solutions built on Einstein GPT for sales and service will rely on the same concepts as any custom implementation, including prompt engineering, potential fine-tuning, and choice of LLM. The latter is an important consideration because each LLM is structured a little differently, so asking the same question of ChatGPT and Google’s Bard may produce very different outputs. As organizations think about building tools that leverage multiple LLMs in Salesforce’s Foundational Services layer, they may need to plan for handling the inputs to and outputs from different systems.
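One common way to handle outputs from different systems is to write a small adapter per provider that converts its raw response into a single shape the rest of your application understands. The Python sketch below assumes two hypothetical response formats, `provider_a` and `provider_b`. Real providers' response structures differ and should be taken from their documentation.

```python
from dataclasses import dataclass

@dataclass
class NormalizedResult:
    provider: str
    text: str

# Each adapter understands one (hypothetical) raw response shape.
def from_provider_a(raw: dict) -> NormalizedResult:
    return NormalizedResult("provider_a", raw["choices"][0]["text"].strip())

def from_provider_b(raw: dict) -> NormalizedResult:
    return NormalizedResult("provider_b", " ".join(raw["candidates"]).strip())

ADAPTERS = {"provider_a": from_provider_a, "provider_b": from_provider_b}

def normalize(provider: str, raw: dict) -> NormalizedResult:
    """Convert a provider-specific response into the common NormalizedResult."""
    if provider not in ADAPTERS:
        raise ValueError(f"No adapter for provider {provider!r}")
    return ADAPTERS[provider](raw)

print(normalize("provider_a", {"choices": [{"text": "  Hello  "}]}))
print(normalize("provider_b", {"candidates": ["Hello", "there"]}))
```

Downstream code, such as the fact check on generated messages, then only ever sees `NormalizedResult`, no matter which LLM produced the text.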

Tableau GPT and Tableau Pulse

Even more recently, Tableau unveiled two tools that will be powered by generative AI: Tableau GPT and Tableau Pulse. The tools promise to reimagine data analysis in Tableau by letting users ask a question in a prompt and see the resulting visualizations, and by making interaction with data more seamless for all members of an organization, not just data experts. Tableau GPT and Tableau Pulse take on some of the hardest parts of working with data (building and interpreting charts) and make both easier: they give end users a streamlined way to create views of their data without digging into technical code or interfaces, and they help highlight the insights that Tableau uncovers.
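We don't know how Tableau GPT implements question-to-chart generation internally. The Python sketch below illustrates one generic safeguard any such tool might use. If a model proposes a chart as JSON, validate that proposal against the actual columns before rendering it, so a hallucinated field never becomes a misleading chart. The spec format (`chart`, `x`, `y`) and the column names are assumptions made for this example.

```python
import json

ALLOWED_CHARTS = {"bar", "line", "scatter"}

def validate_chart_spec(llm_output: str, columns: set) -> dict:
    """Parse a model-proposed chart spec and reject fields the data doesn't have.

    Invalid JSON also raises ValueError, since json.JSONDecodeError subclasses it.
    """
    spec = json.loads(llm_output)
    if spec.get("chart") not in ALLOWED_CHARTS:
        raise ValueError(f"Unsupported chart type: {spec.get('chart')!r}")
    for axis in ("x", "y"):
        if spec.get(axis) not in columns:
            raise ValueError(f"Unknown column for {axis}: {spec.get(axis)!r}")
    return spec

# Hypothetical dataset columns and model output.
columns = {"region", "quarter", "revenue"}
spec = validate_chart_spec('{"chart": "bar", "x": "region", "y": "revenue"}', columns)
print(spec)
```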

Slack GPT

Because Salesforce’s generative AI connectivity is built into the entire platform, this functionality can extend to Slack as well. Slack GPT allows for increased productivity based on two core concepts:

- Automated message generation

- Automated summarization of messages

Much like the example we showed earlier, Slack GPT will make it easier for users in Slack to change the style and content of messages, helping them tweak drafts and reproduce similar messages for different audiences. The second use case might be even more important: by summarizing chat threads or messages, users can distill entire conversations into easily digestible pieces.
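Long threads often exceed what a model can take in one prompt, so summarization is commonly done in two passes. First each chunk of the thread is summarized, then the partial summaries are combined. The Python sketch below shows that "map-reduce" approach in general terms. It is not a description of how Slack GPT works. `summarize` is a hypothetical callable that in practice would wrap a call to whichever LLM you use, and `max_chars` is an illustrative stand-in for a real token limit.

```python
from typing import Callable, List

def chunk_messages(messages: List[str], max_chars: int) -> List[List[str]]:
    """Group consecutive messages into chunks of at most max_chars characters.

    A single message longer than max_chars still gets a chunk of its own.
    """
    chunks: List[List[str]] = []
    current: List[str] = []
    size = 0
    for msg in messages:
        if current and size + len(msg) > max_chars:
            chunks.append(current)
            current, size = [], 0
        current.append(msg)
        size += len(msg)
    if current:
        chunks.append(current)
    return chunks

def summarize_thread(messages: List[str],
                     summarize: Callable[[str], str],
                     max_chars: int = 2000) -> str:
    """Summarize each chunk, then combine the partial summaries in one final call."""
    if not messages:
        return ""
    partials = [summarize("Summarize this chat:\n" + "\n".join(chunk))
                for chunk in chunk_messages(messages, max_chars)]
    if len(partials) == 1:
        return partials[0]
    return summarize("Combine these summaries:\n" + "\n".join(partials))
```

For very long threads, the combined partial summaries can themselves grow too large. A fuller version would repeat the reduce step until the text fits.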

Next steps and considerations for generative AI embedded in CRM

Generative AI is rapidly shaking up business operations as we know them, and what’s true today may change tomorrow. The technology is advancing quickly, and it can be overwhelming to know what is and isn’t possible.

At Atrium, our goal is to help customers better understand how they can leverage tools like generative AI, Einstein GPT, Tableau GPT and Pulse, and others to drive real, actionable business value. Curious about how you can leverage these tools, or want to understand more about how they work?

Reach out and let’s discuss your goals with generative AI and use cases for your business.