When people discuss artificial intelligence, it is usually through the lens of a hypothetical, hyper-advanced future state of AI, much like a sci-fi movie in which machines take over the world.

We aren’t quite there yet (and let’s hope we never get there), but AI already goes beyond “yes/no, if this/then that” scenarios, and with machine learning, bias can come into play. So what is bias, how do we recognize it, and how do we counteract it to steer toward ethical AI, specifically in the field of machine learning?

Instead of discussing the far, far, far away future of humanoid machines, let’s focus on real-world scenarios where AI is used today, what they mean, and how to act appropriately.

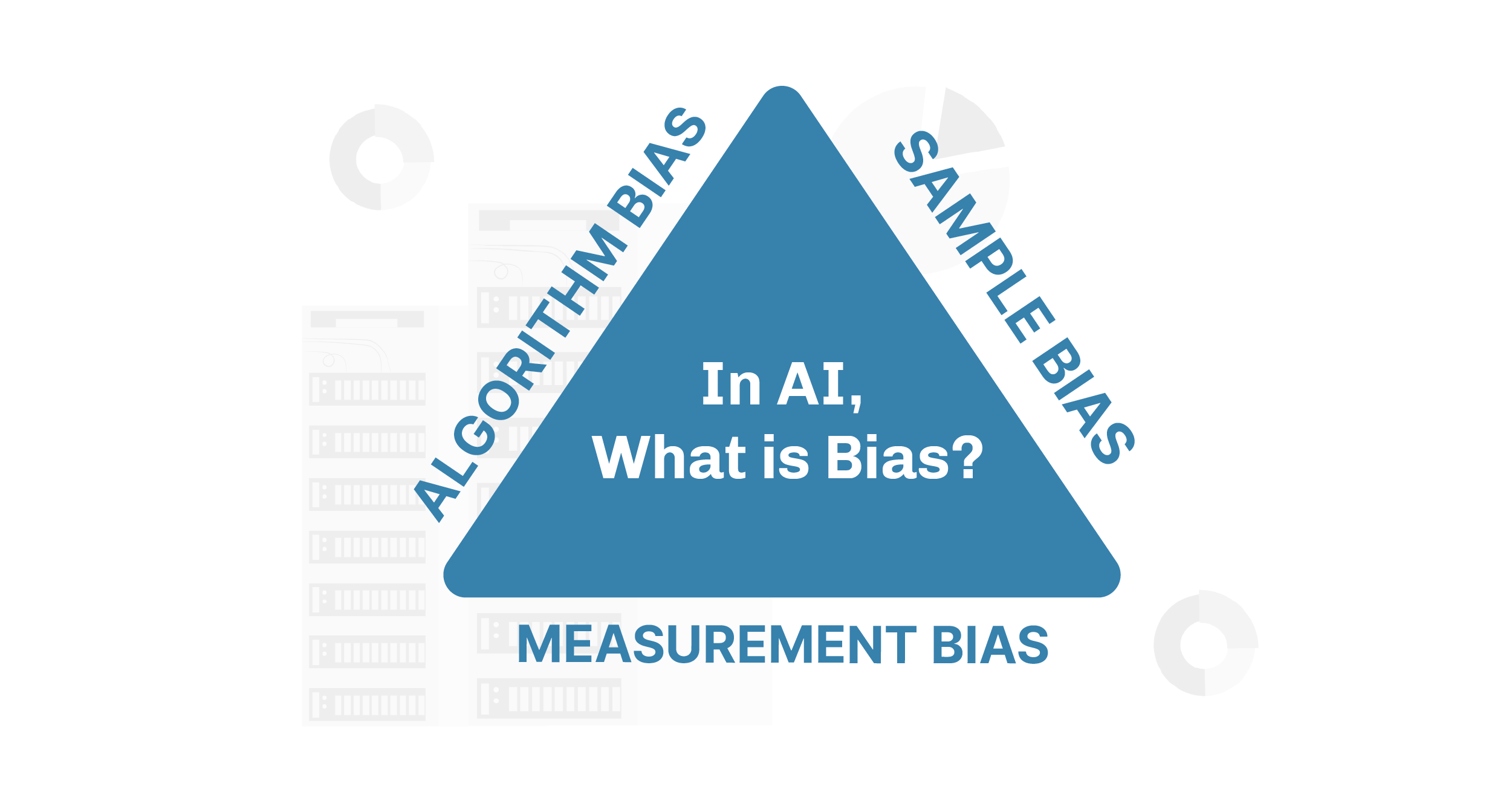

What Is Bias in AI?

Bias is any systematic distortion in the analysis and modeling process. It can arise from a model’s mechanics or be captured in the data itself, so not every bias traces back to how a model was designed. There are several types of bias:

- Algorithm bias: we must assume that the people who design an algorithm, or a model’s overall mechanics, bring assumptions and patterns from their own worldview into its design.

- Sample bias, which we observe often: the data are skewed by the methods used to capture or select them.

- Measurement bias: the term typically describes faulty sensors or measuring devices, but measurement isn’t always objective. In many cases, the recorded values depend on the views and judgment of the individuals recording the data.

All of these biases can be shaped by the perspectives of the people who decide how a prediction model is built and how its data are recorded and selected. Even if we could control for bias in model design, and mechanics and data selection were flawless and fair, the simple and unfortunate truth is that the events being recorded may themselves be biased, and that bias is invariably captured in the data.

In form, biases look much like any other pattern machine learning uses to inform predictions. What sets them apart is their ethical dimension: a pattern with a biased, discriminatory character is relevant to ethical decision making. Not all patterns are, and it is up to those of us who train the models to determine which patterns are biased and which are not.

Real-World Examples of Bias

Examples of bias misleading AI and machine learning efforts are abundant:

- Measurements showed that a job search platform offered higher-level positions more often to men than to women, even when the men were less qualified. The companies may not have hired these men, but the model still produced a biased output.

- An algorithm designed to predict how likely a convicted criminal was to reoffend prompted a valuable discussion when critics questioned whether sample selection and confounding factors, such as postal codes, had biased its predictions by race and socioeconomic status.

- On Facebook, users’ news feeds were filled with content they were likely to read anyway, famously echoing their own views instead of offering different perspectives.

All three of these examples represent bias, but only the first two are relevant to the kind of ethical decision making in question. Echoing people’s own views in the news and media they are shown is certainly questionable and should be analyzed, but the bias in that case is not discriminatory in the way the other two are. Hiring practices and criminal prosecution are areas infamously riddled with racist and sexist bias, which puts them in an entirely different category of ethical consideration.

What’s Unethical?

We can ask what is and isn’t ethical only when we understand the reasons behind our predictions. During model development, we have insight into which patterns drive outcomes. That is where we have the opportunity to counteract biases affecting groups that have been discriminated against, so that they do not fall victim to the same discrimination again.

When biased training data leads to a biased model, we perpetuate discriminatory patterns that have occurred in the past. It is the responsibility of everyone involved in creating and managing predictive models to counteract these biases.

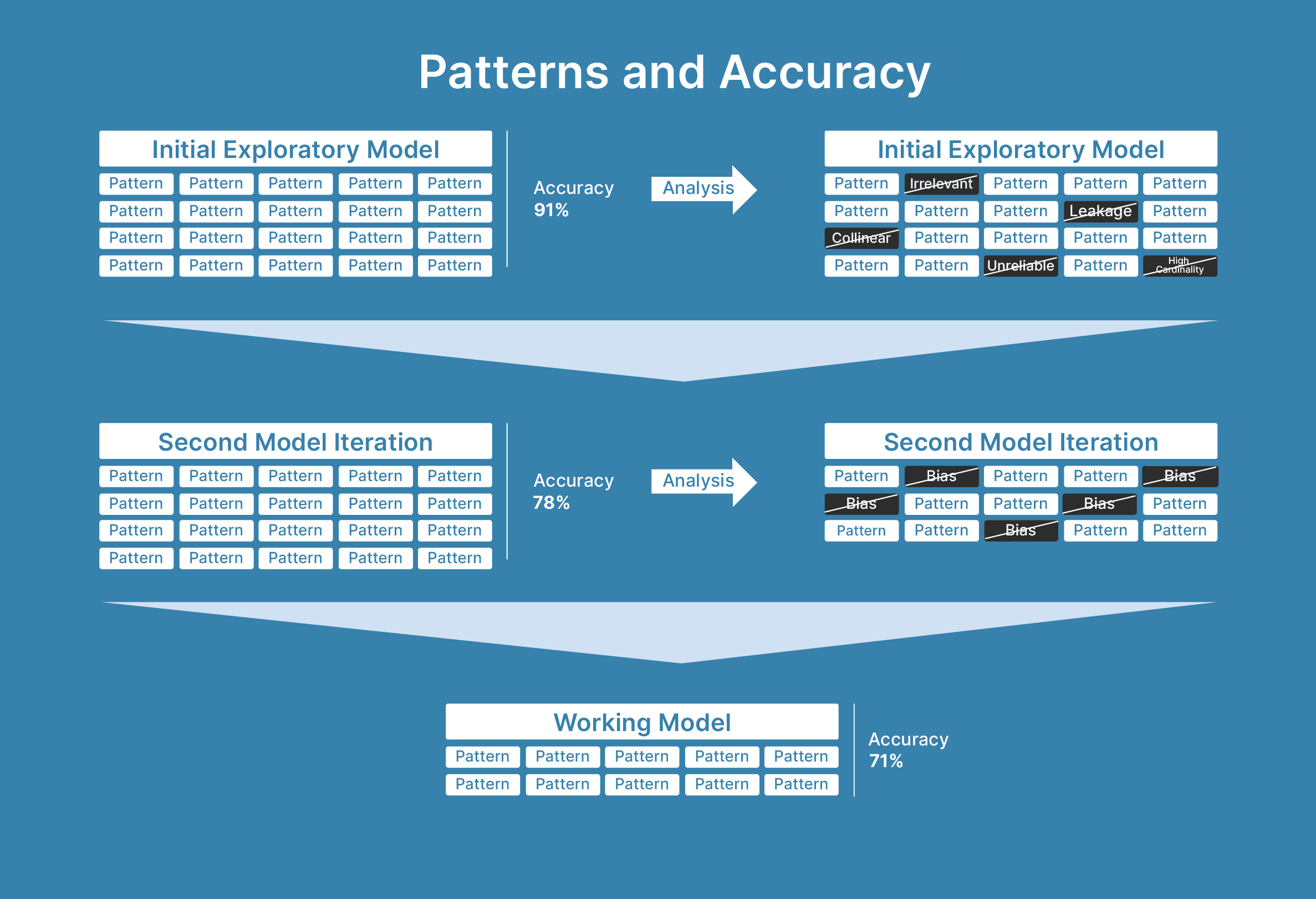

Accuracy is typically used to measure how valuable a model will be. Because accuracy rewards a model for reliably reproducing the patterns in its data, a model trained on a highly biased dataset can appear more accurate precisely because it reproduces the bias. When we weigh the ethical implications of biased patterns, we have to be willing to forgo some accuracy so as not to keep propagating those biases. (See the image above.)
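To make the accuracy trade-off concrete, here is a toy sketch in Python. The records, the two hand-written rules `fair_rule` and `biased_rule` (hypothetical stand-ins for trained models), and the numbers are all invented for illustration; the historical labels are assumed to encode a biased hiring pattern.

```python
# Hypothetical toy records: (qualified, group, historical_label).
# The labels are assumed to encode past bias: some qualified
# applicants in group "w" were labeled 0 (not hired).
records = [
    (1, "m", 1), (1, "m", 1), (0, "m", 0), (0, "m", 0),
    (1, "w", 0), (1, "w", 0), (1, "w", 1), (0, "w", 0),
]

def fair_rule(qualified, group):
    # Predicts from qualification only; group is deliberately ignored.
    return qualified

def biased_rule(qualified, group):
    # Reproduces the historical pattern by also using group membership.
    return 1 if qualified and group == "m" else 0

def accuracy(rule):
    # Fraction of records where the rule matches the historical label.
    hits = sum(rule(q, g) == label for q, g, label in records)
    return hits / len(records)

print(accuracy(fair_rule))    # 0.75
print(accuracy(biased_rule))  # 0.875
```

Scored against biased historical labels, the rule that reproduces the bias comes out ahead, even though it is the less fair of the two.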

What Does Ethical AI Mean to Atrium?

It’s as easy to hope that technology will help us alleviate the issues we face as a society as it is to fear that it will magnify them. At a fundamental level, we see it as our responsibility to surface insights from the data that are relevant to ethical decision making. Our goal is not always to suggest an ethically “correct” path, but to ensure that customers are aware of the implications of the algorithms and data at hand.

When we use data to train a machine learning prediction engine, we look for patterns that support our predictions. If any of those predictions rely on potentially discriminatory data, we surface that bias for discussion. The next question then becomes: who should take part in that discussion to achieve fair results?
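One common way to surface a potentially discriminatory pattern is to compare how often a model makes a positive prediction for each group. The Python sketch below is a minimal, hypothetical example of such a check, not a description of Atrium’s tooling: the group labels, the toy predictions, and the helper names `selection_rates` and `disparate_impact_ratio` are assumptions, and the 0.8 threshold is borrowed from the commonly cited “four-fifths” rule of thumb.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1s) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(predictions, groups).values()
    highest = max(rates)
    # If no group receives a positive prediction, there is no gap to measure.
    return min(rates) / highest if highest > 0 else 1.0

# Hypothetical model output: 1 = shown a senior position, 0 = not shown.
predictions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["m", "m", "m", "m", "m", "w", "w", "w", "w", "w"]

rates = selection_rates(predictions, groups)
ratio = disparate_impact_ratio(predictions, groups)
print(rates)            # {'m': 0.8, 'w': 0.2}
print(round(ratio, 2))  # 0.25

# Assumed threshold (four-fifths rule of thumb): flag large gaps for people to discuss.
if ratio < 0.8:
    print("Flag for discussion: selection rates differ substantially across groups")
```

A low ratio does not prove discrimination on its own; it marks a pattern that people should examine and discuss before deciding what to do about it.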

The purpose of these discussions is to identify and document the bias that surfaced and the decisions made about it. Beyond that, throughout a model’s lifetime, we use tools and analysis to counteract its natural degradation, to understand the patterns currently driving it, and to identify other factors that may be influencing its outcomes. We consider the ethical implications of newly surfaced patterns at each phase of the model’s life cycle, so that discriminatory bias is identified and documented not only during initial planning and design but also while the model is maintained.

Turning Bias into an Opportunity for Change

Accepting discriminatory bias in predictive models has serious consequences: it perpetuates historical discrimination, it means conducting business in a way that harms the people we make predictions about without their knowledge, and ultimately it deepens discriminatory bias over time. At Atrium, it’s important for us to uphold our own values and ensure these negative consequences are counteracted rather than perpetuated.

To counteract the effects of bias, companies can decide which kinds of bias they refuse to incorporate into their prediction-driven decision making. The inflection point comes when Atrium surfaces these biases to our customers; at that point, it is our responsibility to highlight a responsible path forward.

Surfacing potentially discriminatory patterns for discussion is necessary if we are to drive change rather than perpetuate bias. The groundwork for ethical AI in its hypothetical, hyper-advanced future state is laid today, in the smallest components of automation.

Learn more about Atrium’s services and our AI expertise.